AI Voice-Cloning Scam

It was a regular Tuesday afternoon when Linda Harper, a 58-year-old woman from Phoenix, Arizona, received a call that made her blood run cold.

“Mom, it’s me. Please help me—I’ve been in an accident and they won’t let me leave unless I pay them!”

The trembling voice on the other end was unmistakably her daughter Emily’s—or so she thought.

Panicked and desperate, Linda followed the caller’s instructions, wired $15,000, and prayed she’d never hear such terror in her child’s voice again.

Only later, when she finally reached the real Emily—safe and sound at university—did the horrifying truth set in:

She’d been scammed.

By an AI voice-cloning scam so advanced, even a mother couldn’t tell the difference.

💡 AI Voice-Cloning Scam: What Is It?

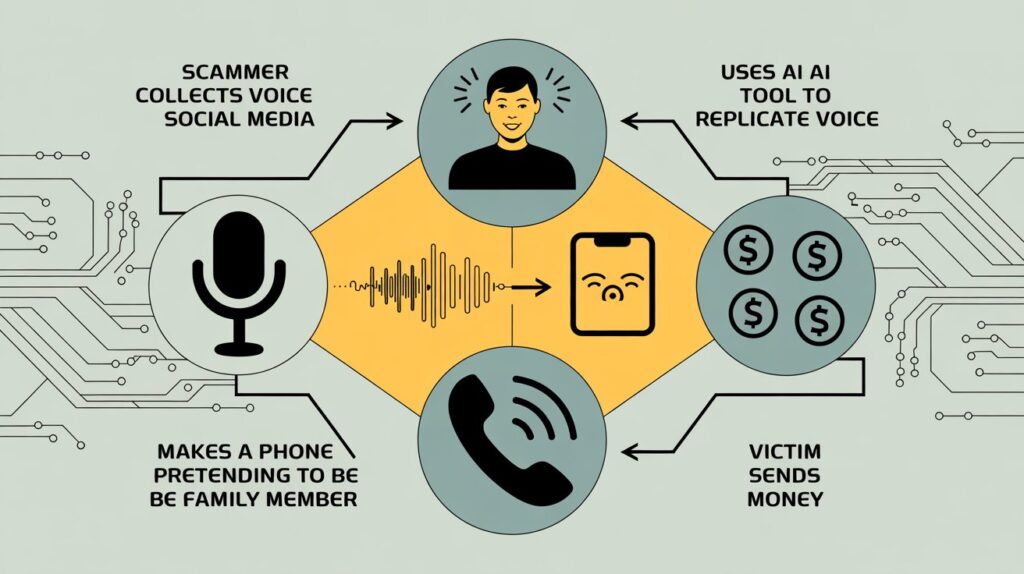

- The AI voice-cloning scam is a frightening evolution of conventional phone fraud. With just a brief clip from social media, YouTube, podcasts, or even a voicemail greeting, scammers can now mimic someone’s voice using deep learning technology.

- Once they have a convincing clone, they call that person’s loved ones (parents, partners, even friends), claim to be in danger, and ask for money immediately.

- As of mid-2025, these scams have become much more common in the United States. According to the Federal Trade Commission (FTC), voice-cloning fraud cases have risen 340% this year alone.

📊 Why Is It So Widespread in the United States in July 2025?

- Affordable & Simple Tech Access: Voice AI tools are now available to anyone with internet access, and many of them are free or cost less than $20 per month.

- TikTok, Reels & YouTube: The popularity of video content means people’s voices are freely available online, ready for cloning.

- Emotional Triggers: Scammers rely on urgency, fear, and family bonds—making these scams more successful than email or text-based ones.

🏛️ AI Tools Used by Scammers

Many scammers use accessible tools such as:

- ElevenLabs: Offers ultra-realistic voice synthesis.

- Descript Overdub: Used to clone voices with a few minutes of audio.

- iSpeech or Resemble.ai: Often exploited for free trial voice cloning.

These tools aren’t inherently malicious but have been misused by cybercriminals due to their ease of use and lack of regulation.

🧐 Real Case: Linda Harper’s $15,000 Loss

Linda shared her story with local Arizona news to warn others. She said:

“The voice sounded exactly like Emily’s. She even used our family nickname ‘Mama Bear’. How could I have known it wasn’t her?”

The scammers told Linda her daughter had been involved in a hit-and-run and would be held until a “legal processing fee” was paid.

Desperate, and without thinking to call Emily back, Linda sent the money to a crypto wallet address.

By the time she realised the truth, the wallet had been emptied and deactivated.

Linda’s trauma wasn’t just financial. She said:

“I cried for hours. I held myself responsible. It destroyed my faith in everything.”

Public safety campaigns have since used her story to increase awareness of this scam.

⚠️ The Step-by-Step Methods Used by These Scammers

1. Information Gathering

They collect samples of your voice from audio messages or social media.

2. AI-Powered Voice Cloning

Your voice, tone, and accent can be replicated using programs like Resemble.ai, ElevenLabs, or other voice-cloning software.

3. Scripted Family Call

They call a family member and follow a script full of emotional phrases such as “I need money now,” “Please don’t call anyone,” or “I’m scared!”

4. Request an Instant Transfer

Scammers demand payment through wire transfers, prepaid cards, or cryptocurrency, all of which are difficult to trace or reverse.

5. The Vanishing Act

Once the money is transferred, the scammer erases all evidence, which makes them very hard to trace.

📈 Who Is Most in Danger?

- Parents of college students

- Older people who are not tech-savvy

- Public speakers or influencers

- Individuals with public social media accounts

Audio from YouTube channels, podcasts, and TikTok videos is frequently stolen by scammers, particularly when family dynamics are openly discussed.

✅ How to Guard Against AI Voice-Cloning Fraud

1. Establish a “safe word” for the family

Agree on a code word that only your loved ones know. If a crisis call doesn’t include it, hang up and verify.

2. Keep Social Media Profiles Private

Post voice recordings or videos publicly only when it is really necessary.

3. Confirm via Video Chat or Phone Call

Always take a moment to call the person back yourself. If they don’t answer, contact another family member or friend to check on them.

4. Don’t Act on Emotion

Scammers pressure you to act quickly. Wait a minute. A real emergency still leaves you time to check.

5. Always Use Two-Factor Authentication

Two-factor authentication makes it harder for scammers to get into your cloud apps, email, or phone and obtain private audio files.

6. Report Right Away

Notify your local authorities and the FTC at reportfraud.ftc.gov. The faster you act, the better the chance of tracing the scam.
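The checks above can also be read as a simple decision procedure. The Python below is only a rough sketch of that idea, not an official tool: the `CrisisCall` fields, the `triage_call` function, and the "two red flags" threshold are all hypothetical choices made for illustration. It combines the safe word from step 1, the callback from step 3, and the hard-to-reverse payment methods that scammers tend to demand.

```python
from dataclasses import dataclass

# Payment channels the article describes as hard to trace or reverse.
HARD_TO_REVERSE = {"wire transfer", "prepaid card", "cryptocurrency"}


@dataclass
class CrisisCall:
    """Facts about a suspicious call (all field names are hypothetical)."""
    gave_safe_word: bool       # step 1: did the caller say the family safe word?
    callback_confirmed: bool   # step 3: did you reach the real person yourself
                               # and did they confirm the emergency?
    demands_secrecy: bool      # e.g. "Please don't call anyone"
    payment_method: str = ""   # what the caller asked for, if anything


def triage_call(call: CrisisCall) -> str:
    """Suggest a next step; the threshold of two red flags is an assumption."""
    # Confirming with the real person outweighs anything heard on the call.
    if call.callback_confirmed:
        return "verified"

    red_flags = 0
    if not call.gave_safe_word:
        red_flags += 1
    if call.demands_secrecy:
        red_flags += 1
    if call.payment_method.lower() in HARD_TO_REVERSE:
        red_flags += 1

    if red_flags >= 2:
        # Step 6: report to local authorities and reportfraud.ftc.gov.
        return "likely scam: report it"
    return "hang up and verify"


# A caller with no safe word who demands secrecy and cryptocurrency.
suspicious = CrisisCall(
    gave_safe_word=False,
    callback_confirmed=False,
    demands_secrecy=True,
    payment_method="Cryptocurrency",
)
print(triage_call(suspicious))  # likely scam: report it
```

Note that the sketch never returns "send money": even when few red flags are present, the default is still to hang up and verify, which matches the advice in steps 3 and 4.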

🔎 What the Authorities Are Doing

The U.S. government is taking action:

- In July 2025, the FCC proposed that AI-generated voice calls be classified as unlawful unless the recipient has given consent.

- AI companies like ElevenLabs now require voice print authorization before cloning.

- Legislators are drafting new bills under the “Voice Fraud Protection Act.”

Still, enforcement is struggling to keep up with tech innovation.

Public Service Campaigns

Major telecom providers like Verizon and T-Mobile have started sending alerts to customers regarding voice scams.

Schools and universities are also now educating students about how their voices can be weaponised.

💬 Psychological Impact on Victims

Victims of AI voice-cloning scams suffer more than financial loss.

The emotional trauma—especially after thinking a loved one was in danger—can cause anxiety, PTSD, and loss of trust.

Linda said it best:

“I feel betrayed by technology. It used my daughter’s voice to break me.”

Therapists now report an increase in patients who have been affected by digital manipulation scams.

🤓 Expert Opinions

“The realism of cloned voices is outpacing public awareness,” says Dr. Kelsey James, a cybersecurity expert at MIT.

She believes voice authentication and biometric safeguards are the next steps in securing communication.

“This isn’t just a scam problem, it’s a psychological warfare issue,” says forensic psychologist Dr. Harold Whitmore.

📣 Final Thoughts: Awareness Is Your Superpower

The AI voice-cloning scam isn’t science fiction. It’s real, here, and more dangerous than ever.

But with awareness, skepticism, and some simple security steps, you can protect yourself and your loved ones.

Stay alert. Stay informed.

And always verify before you trust—even if it sounds like your child.
